AI Adoption Framework: Phase 1, Minimalist

Navigating the Gartner hype cycle in individual AI adoption and building agent-enabled codebases

In my previous post, I introduced a three-phase framework for AI adoption for engineering teams. This post focuses on individual adoption, where engineers augment existing workflows with AI tools. It also explores the reality of this phase through the lens of the Gartner hype cycle and introduces agent-enabled codebases as a concept.

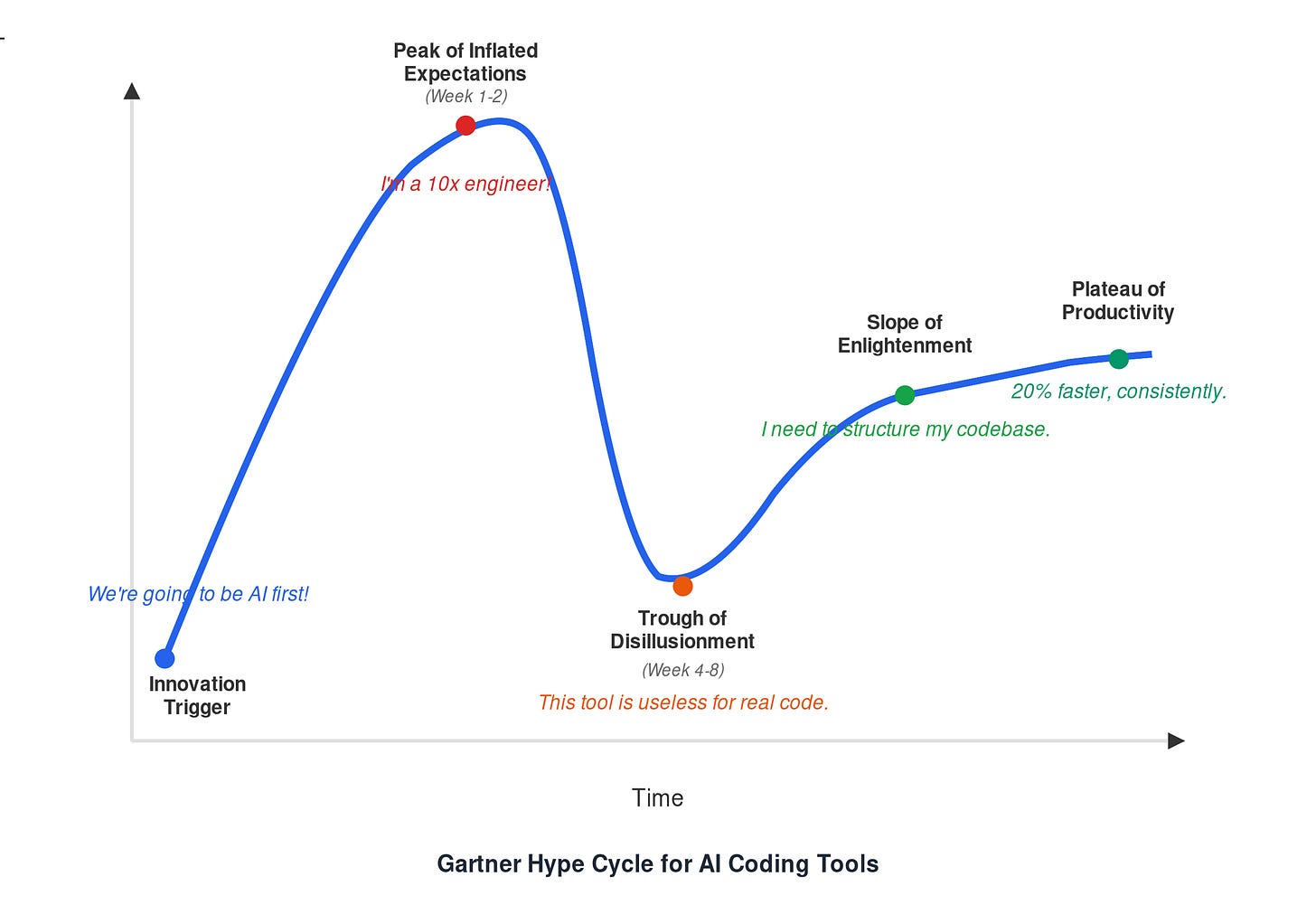

Understanding the Gartner Hype Cycle

The Gartner Hype Cycle is a graphical representation of the maturity, adoption, and social application of emerging technologies. It describes five key phases that new technologies typically experience: Innovation Trigger, Peak of Inflated Expectations, Trough of Disillusionment, Slope of Enlightenment, and Plateau of Productivity.

For AI coding tools, this cycle plays out in a compressed timeframe of weeks instead of years. Understanding where you are in this cycle is critical for managing expectations and navigating the adoption process effectively.

Engineering Reality

Most engineering teams follow predictable stages when adopting new technologies and tools, and these stages map closely onto the Gartner Hype Cycle. I’ll map them specifically for the adoption of AI tools. By this point, there’s already a mandate for the entire organization to be “AI First,” and engineering teams are expected to lead the way.

Peak of Inflated Expectations (Week 1-2)

Engineers discover Claude Code, Cursor, or similar tools. They find examples on Reddit and GitHub showing miraculous productivity gains. They start using these tools exactly like they use Claude UI or ChatGPT: short, transactional interactions without leveraging agentic features or customizations. “Write me a function that does X.” The tool complies. “What does this project do?” Get a good summary to populate CLAUDE.md. “Write tests for this file/function.” Done while you get your coffee. Initial wins feel transformative. Engineers look forward to finally working on real problems rather than writing documentation, integration suites or good commit messages.

Trough of Disillusionment (Week 4-8)

The promised future remains elusive. Initial euphoria gives way to a wide array of annoying problems. PRs are much longer than before and their sheer volume has gone up, thanks to all the vibe-coded features. As much as tools like CodeRabbit, Cursor Bugbot, and Copilot help, it’s still your responsibility to click the big green merge button, which means you still need to read through the wall of text. That leaves less time for the real problems you thought you would be solving. Even when you do work on your own tasks, Claude Code keeps compacting without finishing a simple bug fix. In the not-so-deep corner of your heart, you know it would’ve taken you an hour to fix by hand. You start searching for queries like “How to use Claude Code with large codebases” to make it suck less. Teams need an average of 11 weeks to fully realize AI tool benefits. A METR study found that experienced developers took 19% longer to complete tasks when they used AI tools. The promise breaks.

Slope of Enlightenment (The Breakthrough)

In spite of the chaos, a few team members gain speed. They’re doing something differently.

Turns out, they’ve consistently adapted the tool to work for them instead of changing their behavior. They’ve found the path where they augment their strengths while carefully managing quality and velocity expectations.

You decide to roll up your sleeves. Engineers stop blaming the tools and start adapting their approach. The problem isn’t the tool’s capability—it’s how they’re using it and structuring their work.

The breakthrough comes from two shifts: building personalized context and creating agent-enabled codebases.

Symptoms: Are You Stuck in the Trough?

In addition to the examples I’ve provided, here are a few more symptoms of engineers being stuck in this stage:

AI generates code that doesn’t follow your team’s patterns or standards

You repeat the same context in every conversation

The tool suggests solutions that ignore existing architecture decisions

Context windows fill up before completing meaningful work, with frequent compacting

You blame the tool: “It can’t handle my codebase’s complexity”

Your 100k LOC repo overwhelms the AI, producing generic or wrong answers

These symptoms indicate you’ve addressed surface-level automation but haven’t structured your workflow for sustained AI collaboration. With a massive monorepo, our engineers ran into context window limits, trouble enforcing standards, and the wrong models being used for the task at hand.

The Critical Breakthrough: Agent-Enabled Codebases

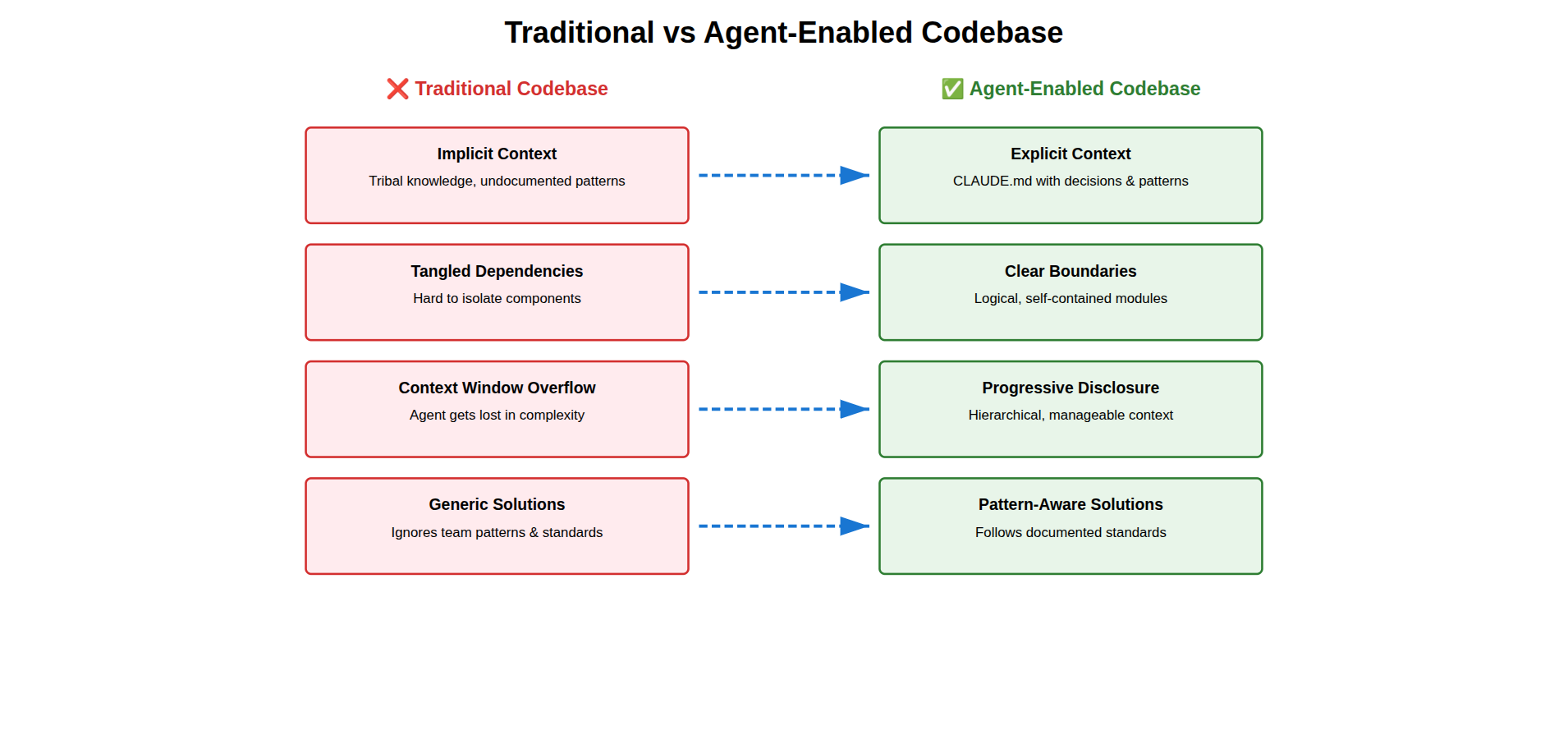

Here’s the insight most engineers and teams miss: Your codebase wasn’t designed for agents to work with it.

Traditional codebases optimize for human developers. Humans navigate complexity through accumulated knowledge, tribal wisdom, and informal documentation. They understand implicit context. Agents don’t have this luxury. They need explicit structure, clear boundaries, and logical decomposition.

An agent-enabled codebase is structured so both humans and agents can work effectively, with the explicit goal of letting agents handle most routine work. This doesn’t mean rewriting everything. It means restructuring how information is organized and accessed.

You might think: “We can’t refactor our codebase just for AI tools.” You’re right, but you’re already refactoring for human onboarding, for testing, for deployment. Agent-enablement isn’t a new goal. It’s a forcing function for engineering practices you’ve deferred.

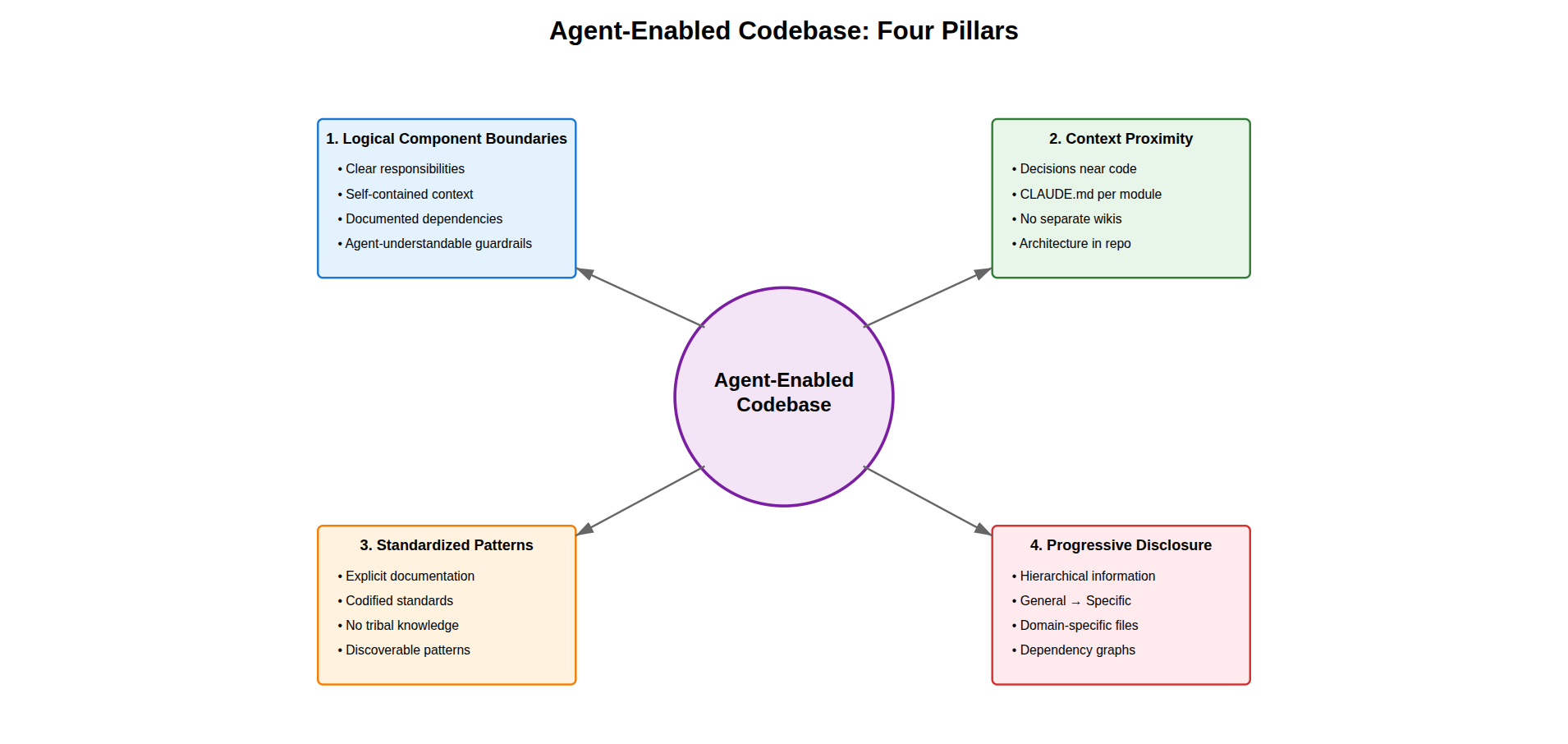

What Makes a Codebase Agent-Enabled?

Logical Component Boundaries: Break your monolith into well-defined modules, not to move to microservices but to aid comprehension. The same applies inside each service if you already run microservices. The objective is to create agent-understandable boundaries that act as guardrails. Each component should have:

Clear responsibility

Explicit, documented dependencies

Self-contained context that fits in a single AI conversation
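One way to check the "fits in a single AI conversation" criterion is to estimate how much text each component holds. The sketch below is a rough illustration, not a real tokenizer: the four-characters-per-token ratio, the `TOKEN_BUDGET` value, and the list of file suffixes are all assumptions you should adjust for your own stack and model.

```python
from pathlib import Path

# Assumption: roughly 4 characters per token; real tokenizers differ.
CHARS_PER_TOKEN = 4
# Hypothetical budget; set it well below your model's actual context window.
TOKEN_BUDGET = 50_000
# Hypothetical list of suffixes that count as "context" for a component.
SOURCE_SUFFIXES = {".py", ".ts", ".go", ".java", ".md"}


def estimate_tokens(component_dir: Path) -> int:
    """Return a rough token estimate for all source files under component_dir."""
    total_chars = 0
    for path in component_dir.rglob("*"):
        if path.is_file() and path.suffix in SOURCE_SUFFIXES:
            total_chars += len(path.read_text(encoding="utf-8", errors="ignore"))
    return total_chars // CHARS_PER_TOKEN


def oversized_components(repo_root: Path, budget: int = TOKEN_BUDGET) -> dict[str, int]:
    """Map each top-level directory that exceeds the budget to its estimate."""
    report: dict[str, int] = {}
    for child in sorted(repo_root.iterdir()):
        if child.is_dir() and not child.name.startswith("."):
            tokens = estimate_tokens(child)
            if tokens > budget:
                report[child.name] = tokens
    return report
```

Calling `oversized_components(Path("."))` from the repo root lists the components that are candidates for splitting. Treat the numbers as a relative signal rather than an exact measurement.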

Context Proximity: Place relevant context near the code. Document architectural decisions in the module they affect, not in a separate wiki. Agents work best when context and code live together. Use CLAUDE.md to document code decisions, high level architecture, communication interfaces and quality gates.

Standardized Patterns: Codify your standards explicitly. Don’t rely on “everyone knows we do X.” An agent doesn’t know until you document it. Make API standards, error handling patterns, and testing approaches discoverable. Again, document these in project-wide memory or local memory. As long as they feed into every decision the agent makes, you’ll get better responses.

Progressive Disclosure: Structure information hierarchically. High-level architecture at the root, implementation details in subdirectories. Agents can navigate from general to specific without loading your entire codebase. Create a dependency graph by referencing modules in the CLAUDE.md file. Engineers already know how this dependency graph is built; it’s just not documented anywhere for agents to use.
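To make the dependency graph idea concrete, here is a minimal sketch that reads per-module CLAUDE.md files and reports which modules document their dependencies and which have no context file at all. The `Depends on:` line is a hypothetical convention invented for this example, not a Claude Code feature, and the sketch assumes each top-level directory is one module.

```python
from pathlib import Path

# Hypothetical convention: a module's CLAUDE.md contains a line such as
# "Depends on: auth, billing". This is an assumption, not a tool feature.
DEPENDS_PREFIX = "depends on:"


def module_dirs(repo_root: Path) -> list[Path]:
    """Return top-level, non-hidden directories, treated here as modules."""
    return sorted(
        p for p in repo_root.iterdir() if p.is_dir() and not p.name.startswith(".")
    )


def read_dependencies(claude_md: Path) -> list[str]:
    """Return module names listed on 'Depends on:' lines of one CLAUDE.md."""
    deps: list[str] = []
    for line in claude_md.read_text(encoding="utf-8").splitlines():
        stripped = line.strip()
        if stripped.lower().startswith(DEPENDS_PREFIX):
            names = stripped[len(DEPENDS_PREFIX):].split(",")
            deps.extend(name.strip() for name in names if name.strip())
    return deps


def build_graph(repo_root: Path) -> dict[str, list[str]]:
    """Map each module that has a CLAUDE.md to its documented dependencies."""
    graph: dict[str, list[str]] = {}
    for module in module_dirs(repo_root):
        claude_md = module / "CLAUDE.md"
        if claude_md.is_file():
            graph[module.name] = read_dependencies(claude_md)
    return graph


def undocumented_modules(repo_root: Path) -> list[str]:
    """Return names of modules that have no CLAUDE.md next to their code."""
    return [m.name for m in module_dirs(repo_root) if not (m / "CLAUDE.md").is_file()]
```

Running `undocumented_modules` in CI is one possible way to keep context close to the code as new modules appear. The graph from `build_graph` can then be summarized in the root CLAUDE.md.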

An example of what we did: Our API development standards are in Confluence. Claude Code won’t know they exist. The solution: We installed the Atlassian MCP server and created a CLAUDE.md file that references these standards for all API-related work. Now the agent follows org guidelines automatically.

Or take a complex project with multiple domains. Instead of one massive CLAUDE.md, create CLAUDE_AWS.md for cloud configurations, CLAUDE_TESTING.md for testing strategies, CLAUDE_API.md for API patterns. Each provides focused context for specific work.

Building Personal Context and Memory

While restructuring your codebase, build personalized memory in parallel:

Bootstrap with /init: Use Claude Code to generate an initial CLAUDE.md, then refine

Be specific: “Use 2-space indentation” beats “Format code properly”

Domain-specific files: CLAUDE_TESTING.md, CLAUDE_SECURITY.md, CLAUDE_DEPLOYMENT.md

Success Metrics

Since the investment and effort that go into AI coding tools need to be quantified, here are a few metrics you can use to track the impact. My recommendation is to start with one or two metrics and track more only if needed.

Sprint velocity increases measurably

Lines of code per engineer trends upward

PR quality improves (fewer review cycles)

Context reuse increases (less repetition)

Teams need 11 weeks to fully realize benefits—research confirms this timeline. Don’t expect overnight transformation. Plan for gradual adoption with ongoing support.
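As an illustration of tracking one of these metrics, the sketch below averages review cycles per PR for each week since adoption began. The `PullRequest` record shape is hypothetical. You would populate it from whatever export your Git hosting provider offers, and you may define a "review cycle" differently.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    # Hypothetical record shape; fill it from your own PR data export.
    week: int           # week number since AI tool adoption started
    review_cycles: int  # rounds of requested changes before merge


def average_review_cycles_by_week(prs: list[PullRequest]) -> dict[int, float]:
    """Return the mean number of review cycles per PR, keyed by week."""
    by_week: dict[int, list[int]] = {}
    for pr in prs:
        by_week.setdefault(pr.week, []).append(pr.review_cycles)
    # Sort by week so the trend reads chronologically.
    return {week: sum(cycles) / len(cycles) for week, cycles in sorted(by_week.items())}
```

A downward trend over several weeks is the signal to look for. A single week's number says little on its own.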

Takeaways for Individual Contributors

Personalize, don’t copy: Reddit examples won’t work for your codebase. Build YOUR workflow. Give rich and accurate context to your global and project level memory files.

Structure for agents: Break down complexity into logical components agents can understand.

Build explicit memory: Document standards, patterns, and decisions where agents can find them.

Use advanced features: Move beyond autocomplete to multi-file orchestration and context management.

Measure progress: Track time saved, quality improvements, and context reuse. Break tasks into smaller chunks that can be completed within a single context.

Takeaways for Leaders

Set expectations: 11 weeks to full value realization. Provide patience and support.

Enable experimentation: Give engineers time to learn, fail, and refine their approaches.

Focus on structure: Help teams create agent-enabled codebases, not just tool adoption.

Share this framework: Guide ICs through the hype cycle with concrete examples.

Measure team-wide: Track sprint velocity, PR quality, and cross-team consistency as leading indicators.

Next Steps

When 80% of your engineers consistently save 10-20% time daily and sprint velocity shows sustained improvement, you’re ready for Phase 2: Collaborative. Individual gains transform into team-wide efficiency through codified expertise. That’s where the real compounding begins.

Navigating AI adoption challenges or building agent-enabled codebases? I’m happy to discuss strategies or share what’s worked for my teams. Also available for talks on AI adoption frameworks for engineering organizations.